We often talk about hybrid cloud business models, but almost always in the context of traditional processor-bound applications. What if deep learning developers and service operators could run their GPU-accelerated model training or inference delivery service anywhere they wanted?

What if they could do so without having to worry about which Nvidia graphics processing unit they were using, or whether their software development environment was complete and had all the latest updates?

To make that happen, Nvidia’s GPU Cloud, aka “NGC,” pre-integrates all the software pieces into a modern container architecture and certifies a specific configuration for Amazon Web Services. Nvidia will certify configurations for other public clouds in the future.

Nvidia built NGC to address several deep learning challenges. Foremost is that deep learning developers have trouble staying current with the latest frameworks and optimizing those frameworks for the GPU hardware they have deployed.

Nvidia has the added challenge of transparently upgrading its current deep learning customers to use Volta’s Tensor Core capability as new Volta-based GPU products enter the market.

Tensor Cores give deep learning developers significantly more performance for matrix math than Nvidia’s stock GPU pipelines provide, but developers must know to target Tensor Cores when they are running on Volta GPUs. NGC does that automatically.
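To make the matrix-math point concrete, here is a minimal Python sketch of the operation a Volta Tensor Core performs: a multiply-accumulate, D = A x B + C, in which the inputs A and B are half precision and the running sum is kept in a wider format. It only emulates the numerics on a CPU and says nothing about how NGC's frameworks dispatch work to the hardware. The function names are hypothetical, and rounding the accumulator to FP32 after every addition is a simplifying assumption, not a description of the silicon.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mixed_precision_matmul(a, b, c):
    """Emulate D = A x B + C with FP16 inputs and an FP32 accumulator.

    a is n x k, b is k x m, c is n x m, all given as lists of lists.
    This is an illustrative model only (assumption: accumulate in FP32
    after every product), not a bit-exact Tensor Core simulator.
    """
    n, k, m = len(a), len(b), len(b[0])
    d = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            acc = to_fp32(c[i][j])  # accumulator starts from C in FP32
            for p in range(k):
                # Inputs are rounded to FP16 before they are multiplied.
                product = to_fp16(a[i][p]) * to_fp16(b[p][j])
                acc = to_fp32(acc + product)
            d[i][j] = acc
    return d
```

Calling `to_fp16(0.1)` returns a value close to, but not exactly, 0.1, which is the precision given up on the inputs. Keeping the accumulator wider is what stops that rounding error from compounding across a long sum.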

Integration and Tuning

NGC just makes sense. It is a logical extension of Nvidia’s GPU-accelerated cloud images, which the company developed for internal use in much the same way that AWS and other cloud giants have deployed new services.

Nvidia’s own developers were challenged in creating process flows for Nvidia’s internal projects. They needed to cope with the complexity of maintaining optimized versions of multiple deep learning frameworks. Different frameworks serve different applications and markets, including drones, autonomous cars and trucks, and internal chip development simulations.

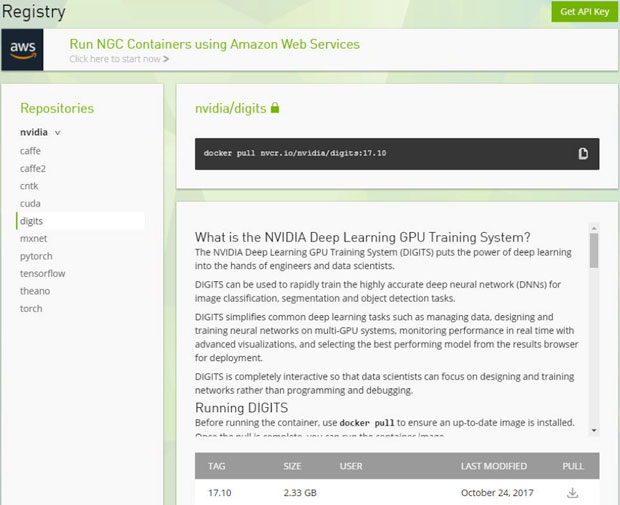

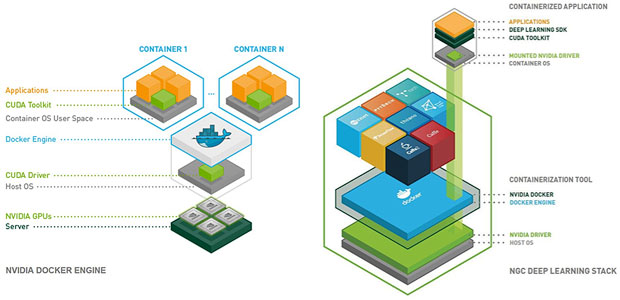

NGC is not a simple aggregation of deep learning software development tools and frameworks in a container. There are several key components to NGC: Nvidia GPU drivers, the container, development tools, CUDA runtimes, Nvidia libraries, configuration files, environment variables, configuration management and certification.

All of these components are important for Nvidia to deliver NGC as a turnkey service on multiple public clouds. Plus, given the rapidly evolving state of deep learning tools, Nvidia has committed to upgrading and recertifying its NGC container images on a monthly schedule.

Nvidia initially will tune, test and certify NGC to work with Amazon EC2 P3 instances using Nvidia Tesla V100 GPUs, and with Nvidia’s DGX Systems using Nvidia Tesla P100 and V100 GPUs.

Nvidia’s certification provides a guarantee that all the solution components are integrated properly and tuned for the best possible performance. There are other deep learning instances available in the AWS Marketplace, but they are not tuned, tested, updated or certified by Nvidia.

Nvidia-Docker Project

Two years ago, Nvidia open-sourced its development project to containerize its CUDA software development environment plus runtime OS, executables and libraries for Nvidia GPUs. The result is the GitHub project named “Nvidia-docker” (version 1.0.1 was released in March 2017 and version 2.0 is now in alpha test). Nvidia sometimes refers to Nvidia-docker as “nv-docker,” but they are the same thing.

Scaling from an on-prem PC or workstation to an on-prem Nvidia DGX-Station or DGX-1 server (from a single GPU card to clusters of eight NVLink-connected Pascal or Volta GPU chips) is possible by starting with the open source Nvidia-docker distribution and then moving to a certified NGC instance.

Scaling from on-prem to public cloud can be done in the same manner. The biggest change in moving from Nvidia-docker to NGC is that Nvidia maintains NGC Docker images for developers, so developers no longer have to update their own Nvidia-docker images.

Microsoft may be next in supporting NGC on Azure. There is an Azure deployment thread in the Nvidia-docker repository. The deployment thread is based on Azure’s N-Series virtual machine. Given that Nvidia already has positioned NGC as multicloud, well… we’ll see what the future holds.

First, Microsoft would have to announce a new N-Series VM based on Nvidia’s Volta products. Microsoft already has previewed Azure’s NCv2 instance using Nvidia’s P100 GPUs, and Azure is mentioned in Nvidia’s NGC Users FAQ, so it’s not a stretch to expect V100 VMs in the not-too-distant future. Nvidia then would have to certify a known good configuration of Nvidia-docker for Azure.

The Nvidia-docker project also provides limited build support for IBM’s Power architecture. Given IBM’s work in scaling Nvidia GPU cluster performance, Nvidia-docker support might create interesting options for GPU containers in the OpenPOWER ecosystem in 2018.

DIY Developer-Friendly

The Nvidia-docker container runs on x86 host instances running Ubuntu 16.04 or CentOS 7. Nvidia developed host OS drivers that can run multiple virtual GPU images, so that a single host OS can manage multiple containers, each virtually accessing multiple GPU chips across many GPU sockets or add-in cards.
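For readers who want to try the DIY route, the sketch below builds the kind of command line the Nvidia-docker project documents as a smoke test: running `nvidia-smi` inside the public `nvidia/cuda` image. These details come from the project's own documentation, not from Nvidia's NGC announcement. The helper names are hypothetical, and the `NV_GPU` variable applies to the 1.0 wrapper; the 2.0 runtime is expected to rely on `NVIDIA_VISIBLE_DEVICES` instead, so treat the GPU selection as an assumption to check against the installed version.

```python
import shlex


def build_nvidia_docker_run(image, command, gpus=None):
    """Return (env, argv) for an nvidia-docker 1.0 style 'run' invocation.

    image   -- container image name, e.g. "nvidia/cuda"
    command -- list of strings to execute inside the container
    gpus    -- optional list of GPU indices to expose (assumption: the
               1.0 wrapper reads them from the NV_GPU environment variable)
    """
    argv = ["nvidia-docker", "run", "--rm", image] + list(command)
    env = {}
    if gpus is not None:
        env["NV_GPU"] = ",".join(str(g) for g in gpus)
    return env, argv


def shell_line(env, argv):
    """Render the invocation as a single copy-and-paste shell line."""
    parts = [f"{key}={shlex.quote(value)}" for key, value in env.items()]
    parts += [shlex.quote(arg) for arg in argv]
    return " ".join(parts)


if __name__ == "__main__":
    env, argv = build_nvidia_docker_run("nvidia/cuda", ["nvidia-smi"], gpus=[0, 1])
    print(shell_line(env, argv))
    # To actually launch it on a GPU host, something like the following
    # would work (requires Docker, the Nvidia driver and nvidia-docker):
    #   import os, subprocess
    #   subprocess.run(argv, env={**os.environ, **env}, check=True)
```

The sketch only assembles the command and does not execute it, so it can be read and adapted on a machine that has no GPU.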

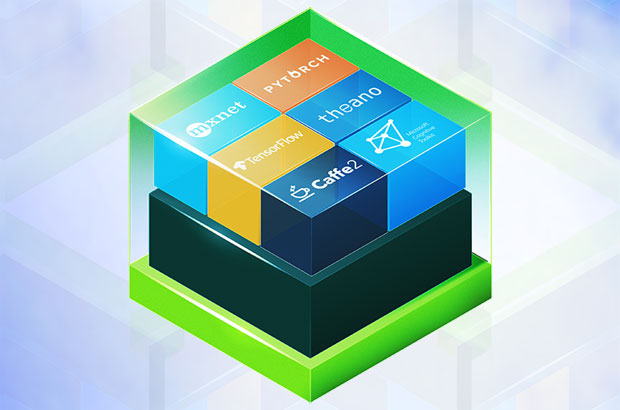

NGC initially supports most major deep learning training frameworks: Caffe/Caffe2, CNTK, MXNet, Torch, PyTorch, TensorFlow and Theano. Trained models can be loaded into Nvidia’s TensorRT for inferencing.
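A quick way to see which of these frameworks a given container or workstation actually provides is to probe for their Python packages. The sketch below is an illustration, not an NGC tool. The import names are the ones each project commonly uses, the function name is hypothetical, and Lua-based Torch is left out because it has no Python package to probe.

```python
import importlib.util

# Display name -> Python import name (assumed common package names).
FRAMEWORK_MODULES = {
    "Caffe": "caffe",
    "Caffe2": "caffe2",
    "CNTK": "cntk",
    "MXNet": "mxnet",
    "PyTorch": "torch",
    "TensorFlow": "tensorflow",
    "Theano": "theano",
}


def probe_frameworks(modules=None):
    """Report which frameworks can be located without importing them."""
    modules = FRAMEWORK_MODULES if modules is None else modules
    found = {}
    for name, module in modules.items():
        try:
            found[name] = importlib.util.find_spec(module) is not None
        except (ImportError, ValueError):
            # A broken or half-installed package counts as unavailable.
            found[name] = False
    return found


if __name__ == "__main__":
    for name, available in probe_frameworks().items():
        print(f"{name:<12}{'available' if available else 'not found'}")
```

Because `find_spec` only locates a package without importing it, the probe stays fast even when a heavyweight framework is installed.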

The open source version of NGC’s underlying containers and the developer tools are available to anyone running at least an Nvidia Titan-level GPU card and a Linux host operating system. This allows do-it-yourself developers who have large local datasets, or security or compliance concerns, to begin developing deep learning models on prem with the pre-integrated open source version on PCs and workstations. These DIY developers then can migrate to Nvidia’s certified instances on major public clouds as their needs grow.

The opposite migration also can happen. Some deep learning developers may start by using public cloud instances, but as their utilization rates and cloud service costs rise, they might invest in dedicated infrastructure to bring their workload on prem.

NGC’s optimized containers cannot be redistributed by other system vendors (outside of Nvidia’s DGX, AWS and future public cloud partners). End users can register for NGC and then download NGC containers, without support, to each Nvidia-accelerated system or cluster they use. However, HPC customers are much more likely to customize Nvidia-docker instances for their specific hardware architectures.

NGC is intended to streamline deep learning development on widely available Nvidia-accelerated system configurations spanning desktop to public cloud.